A shout out to Jacques Marneweck at Kaizen Garden. Jacques has been helping TextDrive refugees such as myself recover our data after TextDrive went dark.

Jacques is one of the good guys.

In the process of writing Jekyll on GitHub Pages, I became quite proficient in deploying a Jekyll blog to GitHub pages. Here is how I did it:

Create a GitHub repository named username.github.io, where username is your GitHub username, as described in the GitHub Pages documentation.

Create a new Jekyll blog on your local machine:

```
sites/jekyll 1 $ jekyll new github.pages
New jekyll site installed in /Projects/sites/jekyll/github.pages.
```

Initialize your Jekyll configuration. Note that I’ve added support for GitHub Flavored Markdown in the kramdown configuration:

```
sites/jekyll 2 $ cd github.pages
jekyll/github.pages 3 $ vi _config.yml
jekyll/github.pages 4 $ cat _config.yml
# Site settings
title: Getting Started with Jekyll
email:
description: "Sample Jekyll blog hosted on GitHub pages"
baseurl: ""
url: http://ideoplex.github.io

# Build settings
markdown: kramdown
kramdown:
  input: GFM
permalink: pretty
```

Delete the default first post created by Jekyll:

```
jekyll/github.pages 5 $ rm _posts/201?-??-??-welcome-to-jekyll.markdown
```

Create your own first post. My first post contains these instructions.

```
jekyll/github.pages 6 $ vi _posts/2014-05-14-upload-to-github-pages.md
```
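Jekyll expects each post to begin with a YAML front-matter block between two `---` lines. If you would rather not use vi, here is a minimal sketch that creates the post from the shell. It assumes the `post` layout that `jekyll new` generated; the title and body are placeholders, not the contents of my actual post.

```sh
# Make sure the posts directory exists (jekyll new normally creates it).
mkdir -p _posts

# Write a minimal post: YAML front matter between the --- lines, then the body.
# The title and body text are placeholders.
cat > _posts/2014-05-14-upload-to-github-pages.md <<'EOF'
---
layout: post
title: "Deploy a Jekyll blog to GitHub pages"
---

Placeholder body text.
EOF
```

The file name follows Jekyll's YEAR-MONTH-DAY-title convention, which is where the post's date comes from.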

Add a README.md:

```
jekyll/github.pages 7 $ echo '# Deploy a Jekyll blog to GitHub pages

Example of a Jekyll blog deployed on GitHub pages' > README.md
```

Initialize your local git repository:

```
jekyll/github.pages 8 $ git init
Initialized empty Git repository in /Projects/sites/jekyll/github.pages/.git/
```

Add everything and make your first commit:

```
jekyll/github.pages 9 $ git add --all
jekyll/github.pages 10 $ git commit -m "first commit"
[master (root-commit) 223ac2c] first commit
14 files changed, 710 insertions(+)
create mode 100644 .gitignore
create mode 100644 README.md
create mode 100644 _config.yml
create mode 100644 _includes/footer.html
create mode 100644 _includes/head.html
create mode 100644 _includes/header.html
create mode 100644 _layouts/default.html
create mode 100644 _layouts/page.html
create mode 100644 _layouts/post.html
create mode 100644 _posts/2014-05-14-upload-to-github-pages.md
create mode 100644 about.md
create mode 100644 css/main.css
create mode 100644 feed.xml
create mode 100644 index.html
```

Set your GitHub Pages repository as the remote, and push:

```
jekyll/github.pages 11 $ git remote add origin https://github.com/ideoplex/ideoplex.github.io.git
jekyll/github.pages 12 $ git push -u origin master
Counting objects: 20, done.
Delta compression using up to 8 threads.
Compressing objects: 100% (18/18), done.
Writing objects: 100% (20/20), 7.82 KiB | 0 bytes/s, done.
Total 20 (delta 1), reused 0 (delta 0)
To https://github.com/ideoplex/ideoplex.github.io.git
* [new branch] master -> master
Branch master set up to track remote branch master from origin.
```

Congratulations, your blog will shortly be available on GitHub pages.

I’m happy with Hexo, but it never hurts to check out the competition. I recently ran across Prose:

Prose is an open source web-based authoring environment for CMS-free websites and designed for managing content on GitHub. …

Which re-aroused my interest in Jekyll and GitHub Pages. If Prose was able to deliver, then the combination of Prose, Jekyll, and GitHub pages was going to be tough to beat.

Unfortunately, Prose isn’t quite there yet. I had problems with the front-matter section. But it is almost there. It is definitely worth keeping an eye on.

My first month on S3 cost about $0.60. That includes $0.51 for DNS hosting on Route 53.
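Working backward from those two figures, S3 itself (everything other than DNS hosting) came to roughly nine cents:

```latex
\text{S3 and other non-DNS charges} \approx \$0.60 - \$0.51 = \$0.09
```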

My decision to use S3 is looking pretty good.

The more things change, the more things stay the same.

I was tweaking my Nitrous.IO box when it occurred to me that cloud computing is bringing the command line back into primacy. I know you can get an MS Windows box in the cloud. And I know that many apps come with a decent HTTP admin portal. But there are a lot of people talking to Linux boxes via ssh. The command line and I go way back, so Nitrous.IO felt right at home.

Almost like home. I’m a long time zsh user, so I was happy to see that Nitrous.IO included zsh 5.0.5 in their autoparts package manager. Unfortunately, command completion was not working. A quick fix was easy enough (upload the required files and add to fpath) but seemed a bit selfish. Autoparts is open source, so I took a closer look.
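For anyone who just wants the quick fix, it amounts to a few lines in `~/.zshrc`. This is a sketch, assuming the completion function files were uploaded to a hypothetical `~/.zsh/completion` directory:

```zsh
# Sketch of a ~/.zshrc fragment. ~/.zsh/completion is a hypothetical directory
# holding the uploaded completion functions (files named like _git, _ls).
fpath=(~/.zsh/completion $fpath)

# Load and initialize the completion system; fpath must be set before this.
autoload -Uz compinit
compinit
```

Because it only touches my own dotfile, it fixes completion for one user on one box, which is why fixing the package itself seemed like the better route.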

The zsh.rb package definition looked ok. I played around with things just to make sure. No joy. Then I realized that either Nitrous.IO was providing seriously fast boxes or zsh was not being built from source. A little more poking around and I found an autoparts option to install from source:

```
parts install zsh --source
```

And voilà. Completion was working.

The only problem with a static site is that you have to generate your site somewhere. I wanted the option to blog on the go.

I was prepared to provision a server in the cloud and spin it up as necessary. But then I ran across Nitrous.IO. A box in the cloud with a Web IDE and ssh access. Serendipity is a sweet thing.

I took Nitrous for a spin and it was exactly what I needed. They have standard templates for Ruby, Node.js, Python, Go or PHP servers and a package manager to add things like Ack, s3cmd and Zsh to your server.

It is good security practice to grant the least privilege required. So I followed Keita’s instructions on granting user access to an S3 bucket to create a new user that can only access a single S3 bucket. Because my AWS usage is limited to S3 and Route 53, I also gave this user access to AWS billing. That is a minor violation of least privilege, but the added convenience is well worth the small decrease in security.
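For reference, a single-bucket policy along those lines might look like the sketch below. It is not Keita’s exact policy: the bucket name `example-bucket`, the user name `s3-uploader`, and the policy name are hypothetical, and the billing permissions are left out. The script only writes the policy file; attaching it is shown as a comment because that step needs AWS credentials.

```sh
# Hypothetical bucket name; replace with your own.
BUCKET=example-bucket

# Write an IAM policy that allows listing this one bucket and reading,
# writing, and deleting objects inside it, and nothing else.
cat > s3-single-bucket-policy.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
      "Resource": "arn:aws:s3:::${BUCKET}"
    },
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
      "Resource": "arn:aws:s3:::${BUCKET}/*"
    }
  ]
}
EOF

# To attach it to a (hypothetical) IAM user with the AWS CLI:
#   aws iam put-user-policy --user-name s3-uploader \
#     --policy-name s3-single-bucket \
#     --policy-document file://s3-single-bucket-policy.json
```

Note the two statements: bucket-level actions such as listing apply to the bucket ARN, while object-level actions apply to the `/*` ARN for the objects inside it.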

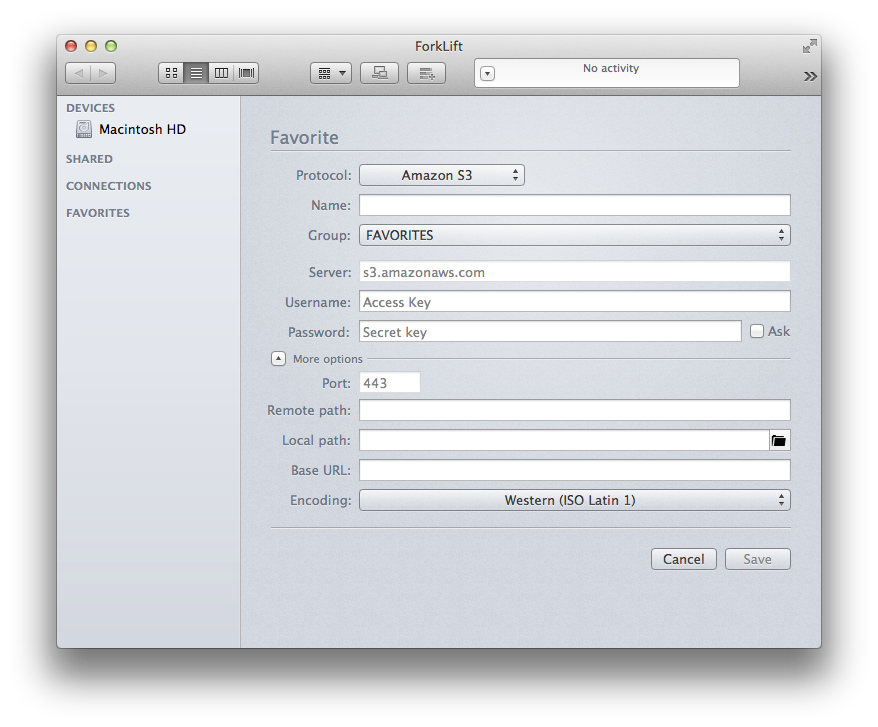

There are plenty of options for copying files to S3. It is important that your choice sets each file’s content type (the Content-Type header) correctly. I use Forklift from binarynights to copy my files to Amazon S3. If you add the site as a Favorite from the Favorites pane, then you have the option to save the secret key.
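The content type matters because S3 serves each object with the Content-Type stored at upload time: an HTML page stored with the wrong type can be downloaded instead of displayed, and browsers can refuse to apply a stylesheet that is not served as `text/css`. If you upload from the command line instead, here is a dry-run sketch that picks a type from each file’s extension and prints the matching `aws s3 cp ... --content-type` command rather than running it. The bucket name and the `content_type_for` helper are hypothetical, `_site` is Jekyll’s default output directory, and the extension table only covers the file types a small blog like this produces.

```sh
# Hypothetical bucket name; _site is Jekyll's default build output directory.
BUCKET=example-bucket
SITE_DIR=_site

# Hypothetical helper: map a file name to a Content-Type by its extension.
content_type_for() {
  case "$1" in
    *.html) echo "text/html; charset=utf-8" ;;
    *.css) echo "text/css" ;;
    *.js) echo "application/javascript" ;;
    *.xml) echo "application/xml" ;;
    *.png) echo "image/png" ;;
    *.jpg|*.jpeg) echo "image/jpeg" ;;
    *) echo "application/octet-stream" ;;
  esac
}

# Dry run: print one upload command per file instead of executing it.
if [ -d "$SITE_DIR" ]; then
  find "$SITE_DIR" -type f | while IFS= read -r f; do
    key=${f#"$SITE_DIR"/}
    echo aws s3 cp "$f" "s3://$BUCKET/$key" --content-type "\"$(content_type_for "$f")\""
  done
fi
```

Mapping by extension rather than sniffing file contents is deliberate: content sniffing tends to report CSS and JavaScript as plain text, which is exactly the mistake to avoid.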

Just because you’re paranoid doesn’t mean they aren’t after you

Joseph Heller

Maybe I shouldn’t advertise, but I have definite tin foil hat tendencies. So I’ve been following the Heartbleed Bug pretty closely. This is scary stuff. An important piece of infrastructure that we all trusted turned out to be untrustworthy.

Security conflicts with convenience. That applies to users and developers alike. Users make their own choices and take their own chances. But developers make choices on behalf of their users. They need an appropriate level of paranoia on their users’ behalf as well.

I needed a new home after my two lifetimes ran out. I quickly realized that I had some problems:

Now I’m back and better than ever, courtesy of Amazon S3 and Route53. It was the best combination of high quality, small scope, and low cost out there. The pay as you go model just works.

If you want to do this yourself, here are some helpful links:

You don’t buy an apartment to make money. You buy it to make a home, a place of your own.

I didn’t become one of the TextDrive VC200, then add the Mixed Grill and a lifetime accelerator, to save money. I joined to establish my home on the web. A place to which I could always return.

That’s part of what TextDrive took away from me. I thought I had a home for life.

Other members of the VC200 may have received good value on their investment. I never had more than one website, and I never launched a live application on my accelerator. But I always knew that I could.

I suppose that I should have known that commercial lifetime isn’t the lifetime of the seller or the buyer, but the lifetime of the product being sold. But I somehow thought it would be different this time. That a medium in which people were brands and reputation mattered would be different.

That’s another thing that TextDrive tried to take away from me. I’m hoping that they failed.